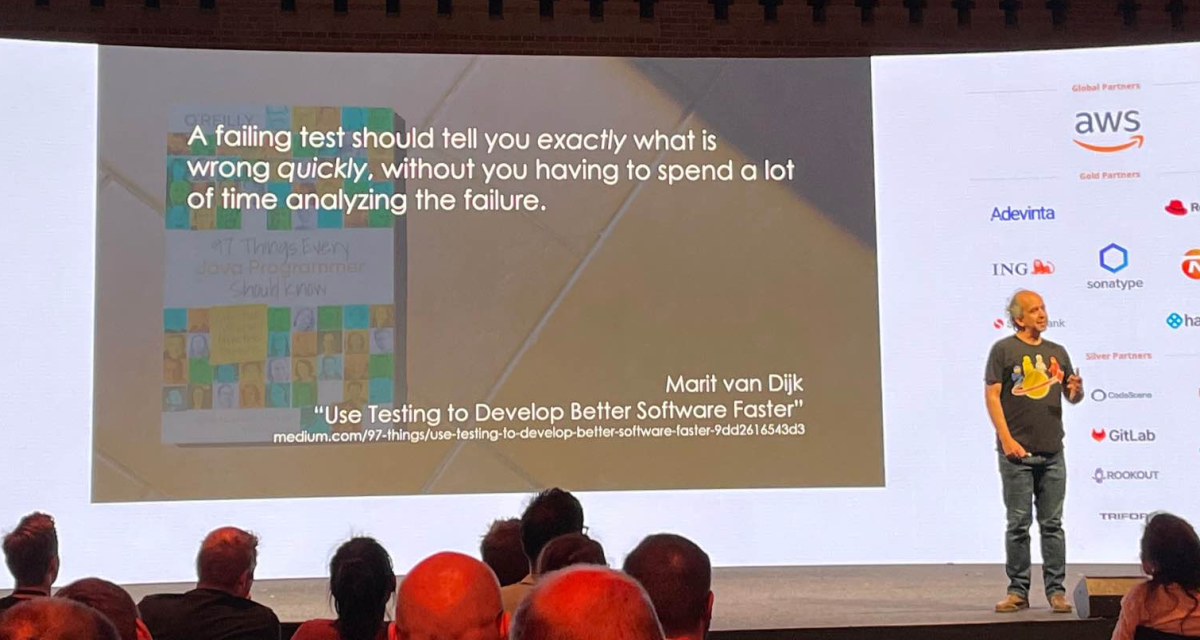

This blog post was first published on the 97 Things blog on Medium and is published in the book “97 Things Every Java Programmer Should Know” (O’Reilly Media).

Testing helps you verify that your code does what you expect it to do. Tests also help you add, change, or remove functionality without breaking anything. But testing can have additional benefits.

Merely thinking about what to test helps you identify the different ways the software will be used, discover things that are not yet clear, and better understand what the code should (and shouldn’t) do. Thinking about how to test these things before you even start your implementation can also improve your application’s testability and architecture. All of this helps you build a better solution before any tests or code are written.

Alongside the architecture of your system, think not only about what to test but also where to test. Business logic should be tested as close as possible to where it lives: unit tests for small units (methods and classes), integration tests for the interaction between different components, contract tests to prevent breaking your API, and so on.
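
As a rough illustration of testing business logic where it lives, the sketch below checks a pricing rule by calling it directly. `DiscountCalculator`, its threshold, and its rate are hypothetical names and values invented for this example. It uses plain Java so it runs without dependencies; in a real project each check would more likely be a JUnit or TestNG test method.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Stand-in for a unit test class; with JUnit each check would be its own @Test method.
final class DiscountCalculatorCheck {
    public static void main(String[] args) {
        // The rule is exercised directly: no HTTP layer, database, or UI is involved.
        expectDiscount("99.99", "0.00");   // just below the threshold
        expectDiscount("100.00", "10.00"); // exactly at the threshold
        expectDiscount("250.50", "25.05"); // well above the threshold
        System.out.println("All discount checks passed");
    }

    static void expectDiscount(String orderTotal, String expected) {
        BigDecimal actual = DiscountCalculator.discountFor(new BigDecimal(orderTotal));
        if (actual.compareTo(new BigDecimal(expected)) != 0) {
            throw new AssertionError(
                "discount for " + orderTotal + ": expected " + expected + " but was " + actual);
        }
    }
}

// Hypothetical business rule (an assumption for this sketch):
// orders of 100.00 or more earn a 10% discount.
final class DiscountCalculator {
    private static final BigDecimal THRESHOLD = new BigDecimal("100.00");
    private static final BigDecimal RATE = new BigDecimal("0.10");

    static BigDecimal discountFor(BigDecimal orderTotal) {
        if (orderTotal.compareTo(THRESHOLD) < 0) {
            return BigDecimal.ZERO.setScale(2);
        }
        return orderTotal.multiply(RATE).setScale(2, RoundingMode.HALF_UP);
    }
}
```

Because the rule is a plain method, its boundary cases can be checked in milliseconds; tests at the API or UI layer then only need to confirm that the rule is wired in, not re-verify every edge case.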

Consider how to interact with your application in the context of a test and use tools designed for that particular layer, from unit tests (e.g., JUnit, TestNG), to API tests (e.g., Postman, REST Assured, RestTemplate), to UI tests (e.g., Selenium, Cypress).

Keep the goal of a particular test type in mind and use the tools for that purpose, such as Gatling or JMeter for performance tests, Spring Cloud Contract or Pact for contract testing, and Pitest for mutation testing.

But it is not enough to just use those tools: They should be used as intended. You could take a hammer to a screw, but both the wood and the screw will be worse off.

Test automation is a part of your system and will need to be maintained alongside production code. Make sure those tests add value and consider the cost of running and maintaining them.

Tests should be reliable and increase confidence. If a test is flaky, either fix it or delete it. Don’t ignore it — you’ll waste time later wondering why that test is being ignored. Delete tests (and code) that are no longer valuable.
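
One possible way to fix a flaky test, assuming the flakiness comes from reading the system clock (the text above does not name a cause), is to inject a `java.time.Clock` and pin it in the test. `TrialPeriod` is a hypothetical class invented for this sketch.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

// Stand-in for a test class; plain Java so the sketch runs without dependencies.
final class TrialPeriodCheck {
    public static void main(String[] args) {
        LocalDate lastDay = LocalDate.parse("2024-03-10");

        // A fixed clock pins "now", so the outcome no longer depends on when the build runs.
        Clock lastSecondOfTrial = Clock.fixed(Instant.parse("2024-03-10T23:59:59Z"), ZoneOffset.UTC);
        if (new TrialPeriod(lastDay, lastSecondOfTrial).hasExpired()) {
            throw new AssertionError("trial should still be active on its last day");
        }

        Clock firstSecondAfterTrial = Clock.fixed(Instant.parse("2024-03-11T00:00:00Z"), ZoneOffset.UTC);
        if (!new TrialPeriod(lastDay, firstSecondAfterTrial).hasExpired()) {
            throw new AssertionError("trial should have expired the day after its last day");
        }
        System.out.println("Trial period checks passed");
    }
}

// Hypothetical production class: the clock is passed in instead of calling LocalDate.now() directly.
final class TrialPeriod {
    private final LocalDate lastDay;
    private final Clock clock;

    TrialPeriod(LocalDate lastDay, Clock clock) {
        this.lastDay = lastDay;
        this.clock = clock;
    }

    boolean hasExpired() {
        return LocalDate.now(clock).isAfter(lastDay);
    }
}
```

Production code would pass `Clock.systemUTC()` (or another system clock), so only the tests see a frozen time.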

A failing test should quickly tell you exactly what is wrong, without you having to spend a lot of time analyzing the failure. This means:

- Each test should test one thing.

- Use meaningful, descriptive names. Don’t just describe what the test does (we can read the code); tell us why it does it. This helps you decide whether a failing test should be updated in line with changed functionality or whether it has found an actual defect that should be fixed.

- Matcher libraries, such as Hamcrest, can help provide detailed information about the difference between the expected and actual results.

- Never trust a test you haven’t seen fail.
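
The points above can be sketched together in plain Java. All names here (`LeaderboardCheck`, `rankDescending`, `brokenRanking`) are hypothetical and invented for this example, and the hand-written failure message only approximates what a matcher library such as Hamcrest would report for you.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.Function;

final class LeaderboardCheck {

    // One behaviour per check, named for why it matters rather than for the mechanics.
    static void highestScoreComesFirstSoPlayersSeeTheLeaderAtTheTop(
            Function<List<Integer>, List<Integer>> ranking) {
        List<Integer> expected = List.of(90, 70, 40);
        List<Integer> actual = ranking.apply(List.of(40, 90, 70));
        if (!expected.equals(actual)) {
            // The message states both values, so the failure explains itself.
            throw new AssertionError("ranking order: expected " + expected + " but was " + actual);
        }
    }

    // Hypothetical implementation under test.
    static List<Integer> rankDescending(List<Integer> scores) {
        List<Integer> copy = new ArrayList<>(scores);
        copy.sort(Comparator.reverseOrder());
        return copy;
    }

    // Deliberately broken variant: returns the scores unsorted.
    static List<Integer> brokenRanking(List<Integer> scores) {
        return scores;
    }

    public static void main(String[] args) {
        highestScoreComesFirstSoPlayersSeeTheLeaderAtTheTop(LeaderboardCheck::rankDescending);

        // See the check fail at least once before trusting it.
        try {
            highestScoreComesFirstSoPlayersSeeTheLeaderAtTheTop(LeaderboardCheck::brokenRanking);
            throw new IllegalStateException("check passed against a broken implementation");
        } catch (AssertionError seenFailing) {
            System.out.println("Seen failing: " + seenFailing.getMessage());
        }
    }
}
```

Running the check against a deliberately broken implementation is a manual version of what mutation testing tools automate: if the check still passes, it is not verifying what its name claims.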

Not everything can (or should) be automated. No tool can tell you what it’s actually like to use your application. Don’t be afraid to fire up your application and explore; humans are way better at noticing things that are slightly “off” than machines. And besides, not everything will be worth the effort of automating.

Testing should give you the right feedback at the right time, to provide enough confidence to take the next step in your software development life cycle, from committing to merging to deploying and unlocking features. Doing this well will help you deliver better software faster.